Deploy Kubernetes and Docker from Scratch with Flannel Networking

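This post explains how to install Kubernetes, launch a container, and finally switch the networking to Flannel.

Package Installation and Environment Setup

First, install Docker and the related packages.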

$ apt-get update

$ apt-get install ssh

$ apt-get install docker.io

$ apt-get install curl

Set up passwordless SSH to the local machine.

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub 127.0.0.1

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

$ ssh root@127.0.0.1

exit

Download Kubernetes v1.0.1.

$ wget https://github.com/GoogleCloudPlatform/kubernetes/releases/download/v1.0.1/kubernetes.tar.gz

tar -xvf kubernetes.tar.gz

Running the All-in-One Package

Now start the installation.

cd kubernetes/cluster/ubuntu

./build.sh

Edit the config file.

cd

vi kubernetes/cluster/ubuntu/config-default.sh

export nodes="root@127.0.0.1"

export roles="ai"

export NUM_MINIONS=${NUM_MINIONS:-1}

Bring up all the components.

$ cd kubernetes/cluster

$ KUBERNETES_PROVIDER=ubuntu ./kube-up.sh

Add the binaries to PATH so that the Kubernetes executables can be run from any directory, then test with kubectl.

export PATH=$PATH:~/kubernetes/cluster/ubuntu/binaries

kubectl --help

Check the current installation status.

kubectl get nodes

Installing kube-UI is optional.

$ kubectl create -f addons/kube-ui/kube-ui-rc.yaml --namespace=kube-system

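$ kubectl create -f addons/kube-ui/kube-ui-svc.yaml --namespace=kube-system

The single-node installation is now complete. If you want to switch to the Flannel network layout, skip the next steps and go straight to the Flannel installation. Otherwise, run the following command to start a WordPress container.

kubectl run wordpress --image=tutum/wordpress --port=80 --hostport=81

Use kubectl to check whether the container has started. This takes about a minute because the image has to be downloaded from Docker Hub.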

kubectl get pods

kubectl get rc

You can also check with the docker command.

docker ps

Look at the network layout; it is the same as Docker's own default networking.

# ifconfig

docker0 Link encap:Ethernet HWaddr 56:84:7a:fe:97:99

inet addr:172.17.42.1 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::5484:7aff:fefe:9799/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1072 (1.0 KB) TX bytes:648 (648.0 B)

eth0 Link encap:Ethernet HWaddr 00:0c:29:ad:f5:61

inet addr:172.16.235.128 Bcast:172.16.235.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fead:f561/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:300420 errors:0 dropped:0 overruns:0 frame:0

TX packets:127648 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:437238373 (437.2 MB) TX bytes:8566239 (8.5 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:522030 errors:0 dropped:0 overruns:0 frame:0

TX packets:522030 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:207725326 (207.7 MB) TX bytes:207725326 (207.7 MB)

veth0ef8f9d Link encap:Ethernet HWaddr 26:f0:39:f9:65:3a

inet6 addr: fe80::24f0:39ff:fef9:653a/64 Scope:Link

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:24 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:1944 (1.9 KB)

vetha2a43fc Link encap:Ethernet HWaddr d2:ad:ea:e1:ff:7f

inet6 addr: fe80::d0ad:eaff:fee1:ff7f/64 Scope:Link

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:648 (648.0 B)

Connect to the container and check its IP address.

root@wordpress-j0f8t:/# ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:ac:11:00:02

inet addr:172.17.0.2 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::42:acff:fe11:2/64 Scope:Link

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)
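To confirm that the container sits on Docker's default bridge, the addresses from the two ifconfig outputs above can be compared. The following is a minimal Python sketch using only the standard library; the helper name on_same_network is hypothetical, and the addresses are copied from the output above.

```python
import ipaddress


def on_same_network(bridge_addr: str, bridge_mask: str, container_addr: str) -> bool:
    """Return True if container_addr falls inside the bridge's network."""
    # ip_interface accepts "address/netmask" as well as "address/prefix".
    bridge = ipaddress.ip_interface(f"{bridge_addr}/{bridge_mask}")
    return ipaddress.ip_address(container_addr) in bridge.network


# docker0 on the host, and eth0 inside the wordpress container
print(on_same_network("172.17.42.1", "255.255.0.0", "172.17.0.2"))
```

The host's eth0 address (172.16.235.128) would fail the same check, since it belongs to a different network from docker0.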

etcd plays a very important role in Kubernetes. Take a look at what etcdctl shows, and at the running Kubernetes processes.

root@kubernetes:~# /opt/bin/etcdctl ls

/registry

root@kubernetes:~# ps aux|grep kube

root 4763 0.0 0.1 8392 1972 ? Ssl 13:20 0:00 /kube-ui

root 10628 3.2 3.6 248140 36140 ? Ssl 13:35 0:03 /opt/bin/kubelet --address=0.0.0.0 --port=10250 --hostname_override=127.0.0.1 --api_servers=http://127.0.0.1:8080 --logtostderr=true --cluster_dns=192.168.3.10 --cluster_domain=cluster.local

root 10629 0.4 1.2 16276 12500 ? Ssl 13:35 0:00 /opt/bin/kube-scheduler --logtostderr=true --master=127.0.0.1:8080

root 10631 1.2 3.9 48896 39936 ? Ssl 13:35 0:01 /opt/bin/kube-apiserver --address=0.0.0.0 --port=8080 --etcd_servers=http://127.0.0.1:4001 --logtostderr=true --service-cluster-ip-range=192.168.3.0/24 --admission_control=NamespaceLifecycle,NamespaceAutoProvision,LimitRanger,ServiceAccount,ResourceQuota --service_account_key_file=/tmp/kube-serviceaccount.key --service_account_lookup=false

root 10632 0.6 1.9 25556 19248 ? Ssl 13:35 0:00 /opt/bin/kube-controller-manager --master=127.0.0.1:8080 --service_account_private_key_file=/tmp/kube-serviceaccount.key --logtostderr=true

root 10633 0.2 1.4 203044 14244 ? Ssl 13:35 0:00 /opt/bin/kube-proxy --master=http://127.0.0.1:8080 --logtostderr=true
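The process list above shows how the components are wired together through command-line flags. As an illustration, the following Python sketch pulls the --name=value flags out of two of those command lines and checks that the kubelet's cluster_dns address lies inside the API server's service-cluster-ip-range. The helper parse_flags is hypothetical, and the command lines are shortened copies of the ps output above.

```python
import ipaddress
import shlex


def parse_flags(command_line: str) -> dict:
    """Collect --name=value flags from a command line into a dict."""
    flags = {}
    for token in shlex.split(command_line):
        if token.startswith("--") and "=" in token:
            name, value = token[2:].split("=", 1)
            flags[name] = value
    return flags


# Shortened copies of the kubelet and kube-apiserver command lines shown above
kubelet = parse_flags(
    "/opt/bin/kubelet --api_servers=http://127.0.0.1:8080 "
    "--cluster_dns=192.168.3.10 --cluster_domain=cluster.local"
)
apiserver = parse_flags(
    "/opt/bin/kube-apiserver --etcd_servers=http://127.0.0.1:4001 "
    "--service-cluster-ip-range=192.168.3.0/24"
)

dns_ip = ipaddress.ip_address(kubelet["cluster_dns"])
service_range = ipaddress.ip_network(apiserver["service-cluster-ip-range"])
print(dns_ip in service_range)
```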

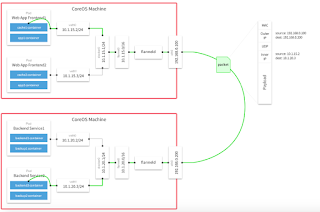

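Installing Flannel

Now switch the cluster to the Flannel network. First, set the etcd endpoint for flanneld.

vim /etc/default/flanneld

FLANNEL_OPTS="--etcd-endpoints=http://127.0.0.1:2379"

Stop flanneld, Docker, kubelet, and kube-proxy.

service flanneld stop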

service docker stop

service kubelet stop

service kube-proxy stop

Install bridge-utils, then bring down and delete the existing docker0 bridge.

apt-get install -y bridge-utils

ip link set dev docker0 down

brctl delbr docker0

Reset the Flannel network configuration in etcd and start flanneld.

/opt/bin/etcdctl rm --recursive /coreos.com/network

/opt/bin/etcdctl set /coreos.com/network/config '{ "Network": "172.17.0.0/16" }'

service flanneld start

You will now see /run/flannel/subnet.env.

ls /run/flannel/subnet.env

Next, modify /etc/default/docker.

vim /etc/default/docker

. /run/flannel/subnet.env

DOCKER_OPTS="--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"

The /run/flannel/subnet.env file looks like this:

vim /run/flannel/subnet.env

FLANNEL_SUBNET=172.17.16.1/24

FLANNEL_MTU=1472

FLANNEL_IPMASQ=false

Start the services again and launch the WordPress container.

service docker start

service kubelet start

service kube-proxy start

kubectl run wordpress --image=tutum/wordpress --port=80 --hostport=81

kubectl get pods

kubectl get rc

docker ps -a

After about a minute, you will see that the container has been launched.

root@kubernetes:~/kubernetes/cluster# kubectl get pods

NAME READY REASON RESTARTS AGE

wordpress-gp4cl 1/1 Running 0 7m

You will see the Flannel network here.

root@kubernetes:~/kubernetes/cluster# ifconfig

docker0 Link encap:Ethernet HWaddr 56:84:7a:fe:97:99

inet addr:172.17.16.1 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::5484:7aff:fefe:9799/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1472 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:536 (536.0 B) TX bytes:648 (648.0 B)

eth0 Link encap:Ethernet HWaddr 00:0c:29:ad:f5:61

inet addr:172.16.235.128 Bcast:172.16.235.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fead:f561/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:297104 errors:0 dropped:0 overruns:0 frame:0

TX packets:118915 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:435040989 (435.0 MB) TX bytes:7818950 (7.8 MB)

flannel0 Link encap:UNSPEC HWaddr 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00

inet addr:172.17.16.0 P-t-P:172.17.16.0 Mask:255.255.0.0

UP POINTOPOINT RUNNING MTU:1472 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:500

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:87012 errors:0 dropped:0 overruns:0 frame:0

TX packets:87012 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:184668449 (184.6 MB) TX bytes:184668449 (184.6 MB)

vethbd09c72 Link encap:Ethernet HWaddr 96:0a:11:72:07:77

inet6 addr: fe80::940a:11ff:fe72:777/64 Scope:Link

UP BROADCAST RUNNING MTU:1472 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:16 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:1296 (1.2 KB)

Compared with the description on the official site, this result is correct.

However, we only have one node here.
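The relationship between the files in this step can be made explicit. The following Python sketch parses the subnet.env content shown earlier, rebuilds the DOCKER_OPTS string that /etc/default/docker expands to, and checks that this node's subnet lies inside the 172.17.0.0/16 network written to /coreos.com/network/config. The helper names parse_env and docker_opts are hypothetical; the values are copied from this post.

```python
import ipaddress

# Content of /run/flannel/subnet.env as shown earlier in this post
SUBNET_ENV = """\
FLANNEL_SUBNET=172.17.16.1/24
FLANNEL_MTU=1472
FLANNEL_IPMASQ=false
"""


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, ignoring blank lines and comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            env[key] = value
    return env


def docker_opts(env: dict) -> str:
    """Build the string that DOCKER_OPTS expands to in /etc/default/docker."""
    return f"--bip={env['FLANNEL_SUBNET']} --mtu={env['FLANNEL_MTU']}"


env = parse_env(SUBNET_ENV)
node_subnet = ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network
# Value stored under /coreos.com/network/config
cluster_network = ipaddress.ip_network("172.17.0.0/16")

print(docker_opts(env))
print(node_subnet.subnet_of(cluster_network))
```

This matches the ifconfig output above, where docker0 has the address 172.17.16.1 with a /24 mask and an MTU of 1472. That MTU is 28 bytes below eth0's 1500, which is consistent with an IP plus UDP header of encapsulation overhead, assuming flannel's default UDP backend is in use here.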

Next, log in to the container and check its IP address.

root@wordpress-gp4cl:/# ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:ac:11:10:02

inet addr:172.17.16.2 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::42:acff:fe11:1002/64 Scope:Link

UP BROADCAST RUNNING MTU:1472 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1296 (1.2 KB) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

Network Issues

Inside the container, ping www.google.com failed, so I checked the nameservers configured in the container.

vim /etc/resolv.conf

nameserver 192.168.3.10

nameserver 172.16.235.2

search default.svc.cluster.local svc.cluster.local cluster.local localdomain

Delete the 192.168.3.10 nameserver entry.

vim /etc/resolv.conf

nameserver 172.16.235.2

search default.svc.cluster.local svc.cluster.local cluster.local localdomain

Ping again, and now it works.

root@wordpress-gp4cl:/# ping www.google.com

PING www.google.com (64.233.188.103) 56(84) bytes of data.

64 bytes from tk-in-f103.1e100.net (64.233.188.103): icmp_seq=1 ttl=127 time=10.2 ms

64 bytes from tk-in-f103.1e100.net (64.233.188.103): icmp_seq=2 ttl=127 time=9.81 ms

The cause turned out to be in the deploy config, which can be fixed for the next deployment.

root@kubernetes:~/kubernetes/cluster# grep -R 192.168.3 *

ubuntu/config-default.sh:export SERVICE_CLUSTER_IP_RANGE=${SERVICE_CLUSTER_IP_RANGE:-192.168.3.0/24} # formerly PORTAL_NET

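ubuntu/config-default.sh:DNS_SERVER_IP=${DNS_SERVER_IP:-"192.168.3.10"}

Try changing the nameserver to 8.8.8.8. Here I made the change manually inside the container; for the next deployment, DNS_SERVER_IP in the ubuntu/config-default.sh file shown above can be changed instead.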

nameserver 8.8.8.8

search default.svc.cluster.local svc.cluster.local cluster.local localdomain

Ping Google again; it works well.

root@wordpress-gp4cl:/# ping www.google.com

PING www.google.com (74.125.203.106) 56(84) bytes of data.

64 bytes from th-in-f106.1e100.net (74.125.203.106): icmp_seq=1 ttl=127 time=11.4 ms

64 bytes from th-in-f106.1e100.net (74.125.203.106): icmp_seq=2 ttl=127 time=12.5 ms

64 bytes from th-in-f106.1e100.net (74.125.203.106): icmp_seq=3 ttl=127 time=15.0 ms

To check the detailed subnet information stored in etcd, use curl.

root@kubernetes:/# curl -s http://localhost:4001/v2/keys/coreos.com/network/subnets | python -mjson.tool

{

"action": "get",

"node": {

"createdIndex": 37,

"dir": true,

"key": "/coreos.com/network/subnets",

"modifiedIndex": 37,

"nodes": [

{

"createdIndex": 37,

"expiration": "2016-03-30T07:37:28.947871304Z",

"key": "/coreos.com/network/subnets/172.17.16.0-24",

"modifiedIndex": 37,

"ttl": 83666,

"value": "{\"PublicIP\":\"172.16.235.128\"}"

}

]

}

}

In the next post, we will look at how to enable VXLAN mode.
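As a companion to the etcd response shown above, the following Python sketch decodes it into a mapping from each leased subnet to the public IP of the host that holds the lease. The function name leased_subnets is hypothetical, and the embedded response is a trimmed copy of the curl output from this post.

```python
import json

# Trimmed copy of the etcd response shown above
ETCD_RESPONSE = r'''
{
  "action": "get",
  "node": {
    "dir": true,
    "key": "/coreos.com/network/subnets",
    "nodes": [
      {
        "key": "/coreos.com/network/subnets/172.17.16.0-24",
        "ttl": 83666,
        "value": "{\"PublicIP\":\"172.16.235.128\"}"
      }
    ]
  }
}
'''


def leased_subnets(response_text: str) -> dict:
    """Map each leased subnet (in CIDR form) to the public IP of its host."""
    body = json.loads(response_text)
    leases = {}
    for node in body["node"].get("nodes", []):
        # The last key segment looks like "172.17.16.0-24"; "-" stands in for "/".
        subnet = node["key"].rsplit("/", 1)[-1].replace("-", "/")
        # The value is itself a JSON document stored as a string.
        leases[subnet] = json.loads(node["value"])["PublicIP"]
    return leases


print(leased_subnets(ETCD_RESPONSE))
```

With only one node there is a single lease; on a multi-node cluster, each host would be expected to appear with its own subnet.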